Demystifying Kubernetes: Exploring the Key Components of Container Management

Ever faced a daunting task, like orchestrating a move, landing a job, or crafting a gourmet dinner? Imagine having an organizer who thoughtfully broke the work down into manageable steps. Someone who allocated tasks efficiently to you and others based on how much work you could handle. Might the daunting task have been easier?

Welcome to Kubernetes, a work orchestrator. Like a chef in a kitchen, conductor in an orchestra, or manager on a sales team, Kubernetes orchestrates work in technology applications.

Since Google released Kubernetes in 2014, it has taken the technology world by storm. The open-source system has grown to millions of users, fueled in part by technology companies shifting toward micro-services.

Interested in understanding how Kubernetes coordinates containers and networks to become a prominent container orchestrator? We’ll break down the key components of Kubernetes and how each component plays into the container management process.

But first: what is container management?

Container Management

What are containers and container managers?

To understand container management, it is best to start with containers. Containers provide a consistent and portable environment to run applications. The encapsulation that containers provide benefits the development, deployment and testing of applications by providing a consistent and isolated environment.

As the name implies, container managers manage containers: they create containers, control the communication between them, and destroy them when they are no longer needed.

What are the benefits of using container managers?

Container managers have 4 key benefits we’ll highlight:

Scale applications up and down quickly

Container managers can bring up large numbers of containers consistently and quickly. By scaling applications up or down on demand, they enable efficient resource utilization and faster application response times.

Provide a consistent testing environment

Because container managers control how deployments are rolled out, the same containers run the same way in every environment. This makes software deployments more reliable and flexible, so technology teams can push and test changes more easily.

Increase developer productivity

Automating the management of containers frees developers to focus on building the application rather than operating it, improving productivity.

Build complex applications

Container managers allow for more complex applications to be seamlessly deployed. This supports the micro-services architecture many technology firms are moving towards.

Brendan Burns, a co-founder of Kubernetes, summed up what container management (orchestration) unlocks:

“Orchestration is sort of that next layer up that allows people to not just deploy their individual applications but actually sort of integrate their applications together in a way that they can coordinate and build bigger systems.” - Brendan Burns

Keeping in mind the value container managers provide, we will dive into the components of a popular container manager: Kubernetes.

Kubernetes Components

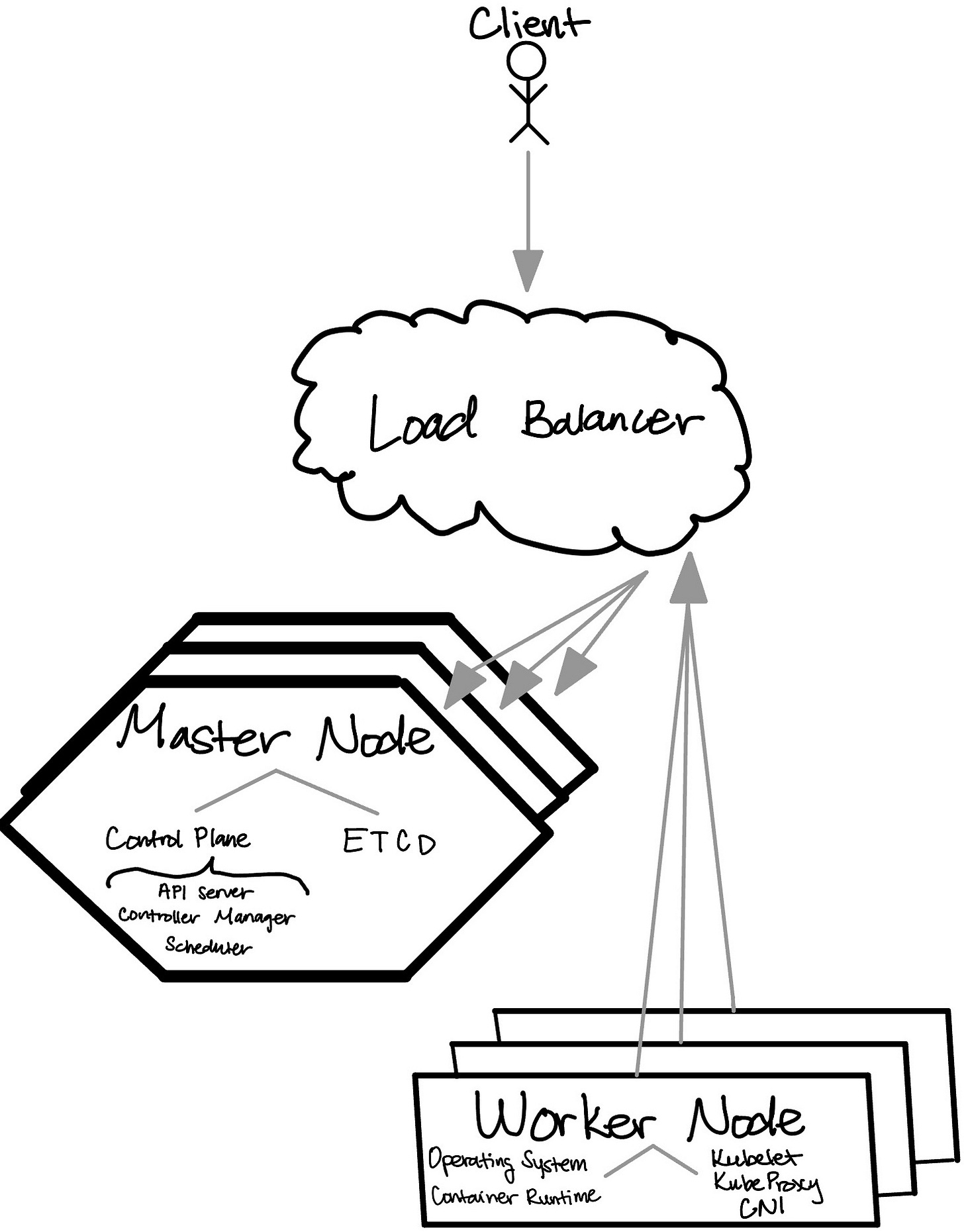

To deploy, scale and handle networking for containers, a Kubernetes cluster contains several different components, each with a unique purpose. These components are the load balancer, master nodes (called control plane nodes in current Kubernetes documentation) and worker nodes. The load balancer handles traffic from external sources to the cluster, the master nodes manage the cluster state and delegate work, and the worker nodes execute work. The diagram above shows this relationship.

Additional optional components can be useful depending on the use case of your Kubernetes cluster. All of these components are discussed in greater detail below.

Load Balancer

To avoid one master node being overwhelmed with requests while others are idle, the load balancer distributes requests across healthy master nodes as evenly as possible. To effectively distribute load, there are three functions the load balancer performs:

Determining which master nodes are healthy by sending health checks

Distributing requests to only healthy master nodes

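Supporting changes in the number of master nodes as load changes (adding or removing the nodes themselves is typically handled by separate provisioning tooling)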

There are two types of load balancers available in Kubernetes: external and internal. Check out A Complete Guide to Kubernetes Load Balancers to learn more about the benefits of using one load balancer type vs another.
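To make the health-check and distribution functions concrete, here is a minimal Python sketch. It is a toy model rather than how any real load balancer is implemented: the `MasterNode` and `RoundRobinBalancer` names are hypothetical, the `healthy` flag stands in for a real health check, and round-robin is just one possible distribution policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MasterNode:
    """Hypothetical stand-in for a master node behind the load balancer."""
    name: str
    healthy: bool = True  # assumption: replaces the result of a real health check


class RoundRobinBalancer:
    """Toy balancer: skips unhealthy nodes and rotates through the rest."""

    def __init__(self, nodes: List[MasterNode]) -> None:
        self.nodes = nodes
        self._counter = 0  # number of requests routed so far

    def healthy_nodes(self) -> List[MasterNode]:
        # Function 1: determine which master nodes are healthy.
        return [node for node in self.nodes if node.healthy]

    def pick_node(self) -> str:
        # Function 2: distribute requests to healthy master nodes only.
        candidates = self.healthy_nodes()
        if not candidates:
            raise RuntimeError("no healthy master nodes available")
        node = candidates[self._counter % len(candidates)]
        self._counter += 1
        return node.name
```

With three nodes where the second is marked unhealthy, repeated calls to `pick_node()` alternate between the first and third node and never return the second.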

Master Nodes

The master nodes can be thought of as the leaders of the cluster. Each master node manages and coordinates the cluster operations sent to it from the load balancer.

The recommended number of master nodes is 3. That way the cluster has the 2 nodes needed to establish quorum plus one backup node. The number of master nodes might grow beyond this depending on cluster load; it is usually kept odd, because a fourth node raises the quorum to 3 without allowing any additional node failures. We will discuss quorum more in the ETCD section below.

There are two key parts to the master node: the control plane and the ETCD server. The control plane manages API requests, controls the worker nodes, and schedules work while the ETCD server stores the cluster state.

Control Plane

Everything outside storing the cluster state is managed by the control plane. The control plane contains the API server, controller manager and scheduler, which manage API requests, worker nodes, and scheduling work respectively.

API Server

The API server exposes the Kubernetes API, allowing users and other components to interact with the cluster. Its main purpose is to receive, process and respond to requests sent to the master node it runs on.

Controller Manager

The controller manager monitors the state of the cluster, ensuring that the desired state matches the actual state. It manages various controllers for tasks like scaling, replication and self-healing.
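The idea of matching desired state to actual state is often called a reconciliation loop. The sketch below shows one pass of such a loop in Python, under simplifying assumptions: the `reconcile` function and the `pod-N` naming scheme are hypothetical, and real controllers act through the API server rather than returning lists.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[List[str], List[str]]:
    """Compare desired and actual state; return (pods_to_create, pods_to_delete).

    `desired` is the wanted number of replicas and `running` lists the names
    of the pods that currently exist. Names like "pod-0" are illustrative only.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")

    missing = desired - len(running)
    if missing > 0:
        # Too few pods: pick unused names until the gap is closed (self-healing).
        existing = set(running)
        to_create: List[str] = []
        index = 0
        while len(to_create) < missing:
            name = f"pod-{index}"
            if name not in existing:
                to_create.append(name)
            index += 1
        return to_create, []
    if missing < 0:
        # Too many pods: keep the first `desired` and remove the rest.
        return [], running[desired:]
    # Actual state already matches desired state: nothing to do.
    return [], []
```

For example, `reconcile(3, ["pod-0"])` returns `(["pod-1", "pod-2"], [])`: two pods are missing, so two are created and none are deleted.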

Scheduler

The scheduler assigns pods to available worker nodes based on resource requirements, constraints and other policies.

Note: a pod is the smallest deployable unit in Kubernetes. It is a group of one or more containers that run together as part of an application.
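Scheduling can be pictured as two steps: filter out the nodes that cannot fit the pod, then score the remaining ones and pick the best. The Python sketch below illustrates that idea only. The `Node`, `Pod` and `schedule` names are hypothetical, and the scoring rule (prefer the node with the most CPU left over, then the most memory) is an assumption chosen for simplicity, not the real scheduler's policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class Pod:
    name: str
    cpu_millicores: int  # requested CPU
    memory_mib: int      # requested memory


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits the pod."""
    # Step 1 (filter): keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node.free_cpu_millicores >= pod.cpu_millicores
        and node.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # the pod stays unscheduled until resources free up

    # Step 2 (score): assumed rule, most CPU left over wins, memory breaks ties.
    best = max(
        feasible,
        key=lambda node: (
            node.free_cpu_millicores - pod.cpu_millicores,
            node.free_memory_mib - pod.memory_mib,
        ),
    )
    return best.name
```

A node that is short on either CPU or memory is never chosen, no matter how much of the other resource it has free.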

ETCD

ETCD (styled etcd by the project itself) is a distributed key-value store that holds the cluster’s state along with configuration data. The data store is highly available and uses quorum to ensure data consistency across master nodes.

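Quorum is the minimum number of master nodes, a majority, that must agree on the cluster state before a change is accepted. It is essential that a cluster maintains quorum at all times: without it, the data store cannot accept new writes. Quorum provides data consistency across all nodes within the cluster.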
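The arithmetic behind quorum is small enough to write down. The Python sketch below uses hypothetical helper names (`quorum_size`, `failures_tolerated`, `has_quorum`) and assumes a simple majority rule, where quorum is more than half of the members.

```python
def quorum_size(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


def has_quorum(members: int, reachable: int) -> bool:
    """True if the reachable members still form a majority."""
    return reachable >= quorum_size(members)
```

With 3 master nodes, `quorum_size(3)` is 2 and `failures_tolerated(3)` is 1. With 4 nodes the quorum rises to 3 but the cluster still tolerates only 1 failure, which is why odd cluster sizes are the usual choice.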

Worker Nodes

As the name suggests, the worker nodes do the actual execution work. A worker node has two main roles: running the application workloads and handling network traffic.

Worker nodes host and manage the execution of containerized applications based on work scheduled by the master nodes. While that work runs, each worker node reports its own status and the status of its pods back to the master nodes.

There are five key parts to the worker node: the OS, container runtime, kube-proxy, kubelet and CNI. The OS and container runtime support running the application containers that perform the scheduled work. The kubelet is the node's agent: it starts the pods assigned to the node and reports their status. Networking inside and outside the node is supported by kube-proxy and the CNI (Container Network Interface) plugin.

Additional Components

Additional components include DNS services, Dashboards, Ingress Controllers and Persistent Storage.

DNS

DNS can be set up so that workloads inside the cluster reach each other by name rather than by IP address. In Kubernetes these names usually belong to Services rather than to individual containers. Using names rather than IP addresses makes internal communication more readable for developers and keeps it working when IP addresses change.
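As an illustration of name-based addressing, cluster DNS in Kubernetes conventionally gives a Service a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain defaults to `cluster.local`. The Python helper below builds such a name. The function name is hypothetical, and the label check is a simplified assumption rather than the full validation Kubernetes performs.

```python
import re

# Simplified DNS label check (assumption): lowercase letters, digits and
# hyphens, starting and ending with a letter or digit, at most 63 characters.
_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def service_dns_name(
    service: str,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",
) -> str:
    """Build the in-cluster DNS name for a Service."""
    for label in (service, namespace):
        if len(label) > 63 or not _LABEL.match(label):
            raise ValueError(f"invalid DNS label: {label!r}")
    return f"{service}.{namespace}.svc.{cluster_domain}"
```

For a Service called `orders` in a namespace called `shop` (both made-up names), `service_dns_name("orders", "shop")` returns `orders.shop.svc.cluster.local`.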

Dashboards

Kubernetes Dashboards provide a web-based interface to monitor and manage clusters. To interact with the cluster outside the terminal, I recommend using Dashboards, which can also help visual learners understand what is going on within the cluster.

Ingress Controllers

The Ingress Controller applies the ingress rules you configure, which describe how traffic from outside the cluster is routed to services inside it. Ingress Controllers are useful if you wish to give external clients access to services within the cluster.
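To give a feel for what an ingress rule does, the Python sketch below routes a request to a service based on host and path prefix, with the longest matching prefix winning. It is a simplified model, not a real Ingress Controller: the `IngressRule` and `route` names, the example hosts and the service names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IngressRule:
    host: str
    path_prefix: str
    service: str  # name of the in-cluster service that receives the traffic


def _segments(path: str) -> List[str]:
    # "/api/orders/" -> ["api", "orders"]; "/" -> []
    return [part for part in path.split("/") if part]


def route(rules: List[IngressRule], host: str, path: str) -> Optional[str]:
    """Return the service for a request, or None if no rule matches."""
    request_parts = _segments(path)
    best: Optional[IngressRule] = None
    best_length = -1
    for rule in rules:
        prefix_parts = _segments(rule.path_prefix)
        # Match whole path segments, so "/api" does not match "/apiv2".
        matches = (
            rule.host == host
            and request_parts[: len(prefix_parts)] == prefix_parts
        )
        if matches and len(prefix_parts) > best_length:
            best, best_length = rule, len(prefix_parts)
    return best.service if best else None
```

Given rules for `/`, `/api` and `/api/orders` on the same host, a request for `/api/orders/42` goes to the service behind `/api/orders`, while `/apiv2` falls through to the service behind `/`.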

Persistent Storage

Persistent storage provides a way to store application data that outlives any single container and is accessible across containers. This can be especially useful if your containers rely on a lot of dependencies and you want to make the containers lighter to spin up. Instead of downloading those dependencies each time a container is created, you install them once on the persistent storage and mount that storage to all applicable containers.

Conclusion

Kubernetes has become increasingly popular with industry shifts towards containerization, micro-services, and cloud technology. It is one of my favorite technologies to work with due to the collaborative community it has built, thorough documentation, and elegant system design. If this blog sparked your interest, check out this tutorial that walks you through creating a Kubernetes cluster from scratch and provides more details about the components discussed above.