The Evolution of Search: How the search space has transformed and what the use cases are for each innovation

From keyword search to retrieval augmented generation: what are the search models behind different search engines, and how to choose which search implementation fits your customers' needs

Flash back 100 years: you have a question you need answered. What do you do? Flip open an encyclopedia? Spend hours parsing books at the library? Inevitably give up? Fast forward to 2024: you can ask chatbots complex questions and receive concise answers in a matter of seconds. Improvements in search have transformed day-to-day life. This didn’t happen all at once: innovations along the way changed how we store, compare and present search results.

Curious about the key innovations made in search and the options that exist today to choose from when constructing your search engine? We’ll dive into these below.

Keyword search

Keyword search might be similar to how a toddler searches for something: look for documents containing the same, or close to the same, word. We can enhance keyword search by allowing for fuzziness (minor variations in letters), configuring synonyms, and using statistical measures like term frequency-inverse document frequency.
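To make fuzziness concrete, here is a minimal sketch using Python's standard `difflib` module. The function name `fuzzy_keyword_search`, the sample documents, and the 0.8 cutoff are assumptions chosen for illustration, not how any particular search engine implements fuzzy matching.

```python
from difflib import get_close_matches

def fuzzy_keyword_search(query, documents, cutoff=0.8):
    """Return documents where every query term has a close match.

    A term "matches" a document if at least one word in the document
    is similar enough (by difflib's ratio) to pass the cutoff.
    """
    results = []
    for doc in documents:
        words = doc.lower().split()
        if all(
            get_close_matches(term, words, n=1, cutoff=cutoff)
            for term in query.lower().split()
        ):
            results.append(doc)
    return results

# Illustrative documents only.
documents = [
    "classic tiramisu recipe",
    "camping in the desert",
    "dessert menu",
]

# The misspelled query still finds the tiramisu document.
matches = fuzzy_keyword_search("tiramsu", documents)
```

Note the trade-off: with this cutoff, a query for "desert" would also match "dessert menu", since the two words differ by a single letter. Fuzziness helps with typos but knows nothing about meaning.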

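Term frequency-inverse document frequency, or TF-IDF, scores a term higher when it appears often in a document but rarely across the rest of the collection, so distinctive words count for more than common ones.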
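As a rough illustration, the sketch below scores documents against a query with one common TF-IDF variant (term frequency normalized by document length, and a plain logarithmic IDF). Production engines typically use refined formulas, so treat the function `tf_idf_scores` and the sample documents as assumptions for demonstration.

```python
import math
from collections import Counter

def tf_idf_scores(query, documents):
    """Score each document against the query by summing TF-IDF per term."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in counts:
                continue
            tf = counts[term] / len(tokens)      # how prominent in this doc
            idf = math.log(n_docs / df[term])    # how rare across all docs
            score += tf * idf
        scores.append(score)
    return scores

# Illustrative documents only.
docs = [
    "the tiramisu recipe uses espresso",
    "the desert is dry",
    "the dessert menu lists the tiramisu",
]
scores = tf_idf_scores("the tiramisu", docs)
```

Because "the" appears in every document, its IDF is zero and it contributes nothing. Only "tiramisu" moves the score, which is why the second document scores zero even though it contains a query word.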

Keyword search works great for cases where your customers will be searching for terms that you can expect will have exact matches in your backend. But if your use case requires contextual understanding of the relationship between user search queries and information in your backend, this isn’t your best option.

Semantic search

Semantic search brings contextual meaning into search. This gives search engines a new way to compare words: vectors. Vectors give us a way to mathematically represent the meaning of a word or group of words. From there we can measure how related the meanings of two word groups are by computing the cosine similarity of their vectors.

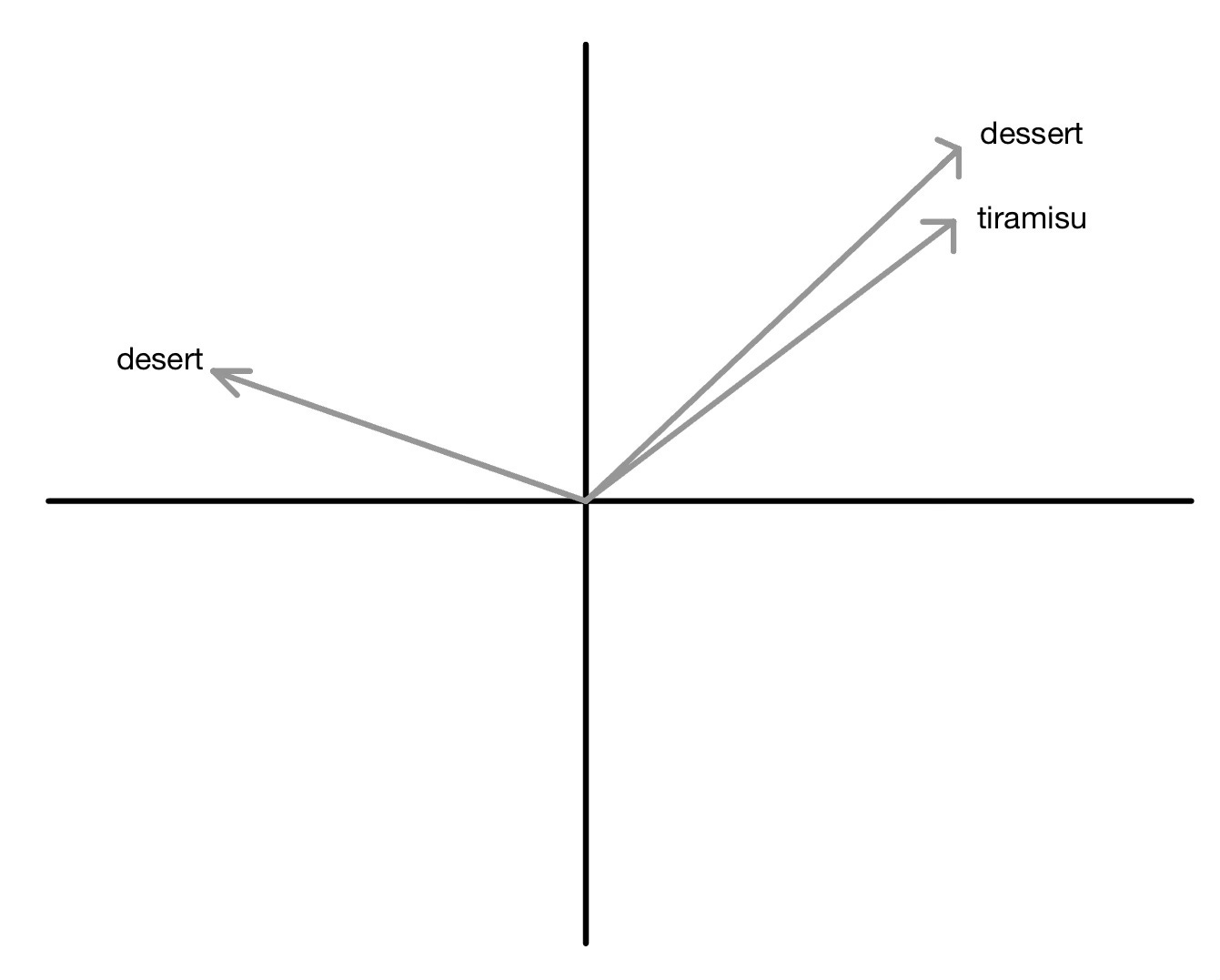

For example, “desert” and “dessert” have similar spelling with drastically different meanings. In contrast, “tiramisu” and “dessert” are not similar in word construction but are very much related: tiramisu is a fantastic dessert. The diagram above shows how we can represent the relationships between “desert”, “dessert” and “tiramisu” through vectors.
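The comparison can be sketched in a few lines of Python. The three-dimensional vectors here are hand-picked, hypothetical values chosen so the example works out; real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: dot product over norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, NOT output from a real model.
vectors = {
    "desert": [0.9, 0.1, 0.0],
    "dessert": [0.1, 0.9, 0.3],
    "tiramisu": [0.0, 0.8, 0.5],
}

sim_dessert_tiramisu = cosine_similarity(vectors["dessert"], vectors["tiramisu"])
sim_dessert_desert = cosine_similarity(vectors["dessert"], vectors["desert"])
```

With these toy values, "dessert" lands much closer to "tiramisu" than to "desert", even though the spelling suggests the opposite.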

Vector search was invented back in the 1950s but really took off in 2021 with the adoption of vector databases like Pinecone and Milvus and vector support in search engines like Elasticsearch. With neural plugins available for vectorization, setting up a vector store to support a semantic search engine has never been easier.

Now we have an approach to search by meaning, and an approach to search by exact terms. But what if we want to do both?

Hybrid Search

Hybrid search can give you the best of both worlds: it accounts for both keyword similarity and semantic meaning. It can take some experimentation to find the right strategy for combining keyword and semantic search in your application.

Keyword and semantic search produce different ranges of scores for search results, so comparing the relevance scores of these different search techniques is like comparing apples and oranges. Therefore, when you configure hybrid search, you can specify how to fuse the two scores, allowing customization of how much the “meaning” of a word impacts results.
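One simple way to fuse the two is to rescale each set of scores to a common range and take a weighted sum. The sketch below assumes min-max normalization and a `semantic_weight` parameter; both are illustrative choices, and other fusion strategies (such as rank-based fusion) exist. The document IDs and raw scores are made up.

```python
def min_max_normalize(scores):
    """Rescale a {doc_id: score} mapping to the 0..1 range."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}

def fuse_scores(keyword_scores, semantic_scores, semantic_weight=0.5):
    """Weighted sum of normalized keyword and semantic scores, best first."""
    kw = min_max_normalize(keyword_scores)
    sem = min_max_normalize(semantic_scores)
    fused = {}
    for doc_id in set(kw) | set(sem):
        fused[doc_id] = (
            (1 - semantic_weight) * kw.get(doc_id, 0.0)
            + semantic_weight * sem.get(doc_id, 0.0)
        )
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)

# Made-up raw scores on very different scales.
keyword_scores = {"doc_a": 12.4, "doc_b": 3.1, "doc_c": 7.8}
semantic_scores = {"doc_a": 0.62, "doc_b": 0.91, "doc_c": 0.55}

ranking_keyword_heavy = fuse_scores(keyword_scores, semantic_scores, semantic_weight=0.2)
ranking_semantic_heavy = fuse_scores(keyword_scores, semantic_scores, semantic_weight=0.8)
```

With a low semantic weight the best keyword match (`doc_a`) ranks first; with a high semantic weight the best semantic match (`doc_b`) takes over. Tuning that weight is the exploration step mentioned above.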

Between keyword, semantic, and hybrid search, we have a lot of ways to customize how we compare a search query to documents. But what if users are searching with complex queries, or want a succinct answer rather than a list of matched documents? This is where large language models become useful.

Large Language Models

Large language models (LLMs) can be used to process both the search query and the search results. You might integrate an LLM into your search architecture to look something like the diagram below.

At the start of the search process, an LLM might be used for query understanding or query expansion. We can think of query understanding as cleaning up the query to focus on its core intent. This is especially useful for conversational search engines, which tend to receive longer, more complex queries. Query expansion, on the other hand, involves adding synonyms or related concepts from a company-specific ontology to the query.
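To show the shape of these two steps, the sketch below uses simple rule-based stand-ins in place of an LLM: a filler-word list for query understanding and a dictionary lookup for query expansion. The names `FILLER`, `ONTOLOGY`, `understand_query`, and `expand_query` are all hypothetical, and an actual LLM-backed version would replace the rules with model calls.

```python
# Hypothetical company ontology: term -> related terms.
ONTOLOGY = {
    "laptop": ["notebook", "ultrabook"],
    "cheap": ["affordable", "budget"],
}

# Hypothetical filler words to drop; an LLM would infer intent instead.
FILLER = {"hi", "please", "can", "you", "show", "me", "a", "i", "need", "the", "some"}

def understand_query(raw_query):
    """Reduce a conversational query to its core terms."""
    tokens = [t.strip("?.,!").lower() for t in raw_query.split()]
    return [t for t in tokens if t and t not in FILLER]

def expand_query(terms, ontology):
    """Append related terms from the ontology, keeping order and no duplicates."""
    expanded = list(terms)
    for term in terms:
        for related in ontology.get(term, []):
            if related not in expanded:
                expanded.append(related)
    return expanded

core = understand_query("Hi, can you please show me a cheap laptop?")
expanded = expand_query(core, ONTOLOGY)
```

Here `core` keeps only "cheap" and "laptop", and `expanded` adds their related terms from the ontology before the query reaches the search backend.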

LLMs can also be leveraged after search results are returned. For applications like Google search, results are returned straight to users. However, for applications where results are summarized and presented to users in a concise answer, such as the results of conversational AI products, an LLM is required.

LLMs are powerful tools, especially for reducing cognitive load. However, the summaries they produce might not directly answer a user’s question. Additionally, because LLMs are trained on data up to a fixed cutoff, they cannot provide information about more recent events. What would be the next step in enhancing this user experience? Getting a cohesive, direct answer constructed from retrieved documents. Enter retrieval augmented generation.

Retrieval Augmented Generation

The approach you took when writing a paper in school is likely similar to the way RAG works. First, look up information surrounding the topic. Second, rely on your new learnings and previous knowledge to put pen to paper.

Applying this example to RAG: researching the topic corresponds to the retrieval step, where new data is gathered based on the search query. Your previous knowledge corresponds to the LLM, which also draws on the data it was trained on. And writing the paper is the augmented generation step, where the model uses both sources to formulate an answer.

RAG differs from using an LLM alone in two ways. First, the retrieval process supplies the LLM with additional information. Second, in the augmented generation step the model creates an answer to the search query, rather than summarizing or returning results.
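The retrieve-then-generate flow can be sketched as follows. Everything here is a simplified assumption: `retrieve` uses naive word overlap where a real system would use keyword, semantic, or hybrid search, and `fake_generate` is a placeholder that simply echoes the prompt where a real system would call an LLM.

```python
def retrieve(query, documents, top_k=2):
    """Return up to top_k documents sharing the most words with the query."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_terms & set(doc.lower().split()))
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for overlap, doc in scored[:top_k] if overlap > 0]

def build_prompt(query, retrieved):
    """Augment the question with the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )

def answer_with_rag(query, documents, generate):
    """Retrieve, augment the prompt, then hand off to the generator."""
    retrieved = retrieve(query, documents)
    return generate(build_prompt(query, retrieved))

def fake_generate(prompt):
    # Placeholder for an LLM call: echoes the prompt so the flow is visible.
    return prompt

# Illustrative knowledge base.
knowledge_base = [
    "tiramisu is an italian dessert made with espresso",
    "the sahara is the largest hot desert",
    "milvus is a vector database",
]
question = "which dessert uses espresso"
answer = answer_with_rag(question, knowledge_base, fake_generate)
```

Only the tiramisu passage reaches the prompt, so the generator works from relevant, current data rather than from its training data alone.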

If you want your product to generate a direct, concise answer to your users’ questions, and have the ability to gather new data, RAG is the approach for you.

Conclusion

Each approach to search provides different levels of context, summarization, and content generation. With these different features come added computational costs and latency. Just as the latest fashion trends might not fit your style, modern search innovations might not always be the best fit for your use case. As innovation in the search space explodes, it is more important than ever to keep the end customer in mind when deciding how to engineer your search engine.
